FAQ

Frequently asked questions about the Tester Community.

How are tester results evaluated?

Rainforest recruits at least 2 testers for every test. Our system monitors each tester’s actions, such as mouse activity, time spent on each step, and correct site navigation, and compares the testers’ results to one another to ensure consistency. Our algorithm looks for 2 matching results or 3 conflicting failures.

- 2 Matching Results. 2 passes or 2 failures are known as a consensus result. When two testers successfully execute a step in a browser and answer yes, the step passes. If two testers fail the same step by answering no, the step, and therefore the test, is marked as “Not Passed/Failed” for that browser.

- 3 Conflicting Failures. If 3 testers fail a test at 3 different steps, the test fails.

Surfacing 2 matching results or 3 conflicting failures.
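The consensus rule described above can be sketched in a few lines of Python. This is only an illustration, not Rainforest’s actual implementation: the function name `evaluate` and the result encoding (`None` for a pass, a step number for a failure) are assumptions made for the example, and the real algorithm also weighs the monitoring signals mentioned earlier.

```python
from collections import Counter
from typing import Optional


def evaluate(results: list[Optional[int]]) -> Optional[str]:
    """Apply the "2 matching results or 3 conflicting failures" rule.

    Hypothetical encoding: each entry is None if the tester passed the test,
    or the 1-based number of the step at which the tester failed.
    Returns "passed", "failed", or None if more testers are needed.
    """
    passes = sum(1 for r in results if r is None)
    if passes >= 2:
        return "passed"  # two matching passes

    failed_steps = Counter(r for r in results if r is not None)
    if any(count >= 2 for count in failed_steps.values()):
        return "failed"  # two testers failed the same step
    if len(failed_steps) >= 3:
        return "failed"  # three testers failed at three different steps

    return None  # no consensus yet
```

For example, `evaluate([None, 2])` returns `None` because one pass and one failure do not form a consensus, while `evaluate([1, 2, 3])` returns `"failed"`.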

How are Acceptance and Rejection determined?

Based on our algorithm and overall evaluation of a result, Rainforest either accepts or rejects each tester’s test results. This is how we determine our confidence in the result’s accuracy.

- Results determined to be correct are accepted and taken into account when reporting the final state of the test (Pass/Fail) to our users.

- Results that we cannot confirm with confidence are rejected. These results are not considered in the final (Pass/Fail) determination of the test itself. However, they are displayed for you to evaluate.

Results can be rejected for the following reasons:

- The tester’s results do not agree with those of the other testers.

- The tester completed the test too quickly for us to be confident in the result.

- The tester did not navigate to the URL defined in the test steps.

- A Rainforest admin rejected the tester’s results after reviewing their work.

- The tester failed to perform the expected actions, such as clicks, scrolls, and mouse movements, over several steps.

- The tester failed a built-in quality control test.
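One way to picture these checks is as a function that collects every rejection reason that applies to a result. The sketch below is hypothetical: the `TesterResult` fields and both thresholds are invented for illustration, since the actual signals and cut-offs Rainforest uses are not documented here.

```python
from dataclasses import dataclass

# Hypothetical thresholds; the real values are not published.
MIN_SECONDS = 30.0
MAX_STEPS_MISSING_ACTIONS = 3


@dataclass
class TesterResult:
    agrees_with_others: bool
    seconds_spent: float
    visited_test_url: bool
    rejected_by_admin: bool
    steps_missing_actions: int
    passed_quality_control: bool


def rejection_reasons(result: TesterResult) -> list[str]:
    """Return every reason that applies; an empty list means the result is accepted."""
    reasons = []
    if not result.agrees_with_others:
        reasons.append("disagrees with other testers")
    if result.seconds_spent < MIN_SECONDS:
        reasons.append("completed too quickly")
    if not result.visited_test_url:
        reasons.append("did not navigate to the test URL")
    if result.rejected_by_admin:
        reasons.append("rejected by an admin")
    if result.steps_missing_actions >= MAX_STEPS_MISSING_ACTIONS:
        reasons.append("expected actions missing over several steps")
    if not result.passed_quality_control:
        reasons.append("failed a quality control test")
    return reasons
```

Returning a list rather than a single flag mirrors the fact that rejected results are still displayed for you to evaluate, so each applicable reason can be shown.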

How are tests sent to testers?

Testers receive tests to complete via a Tester Chrome extension. Once they download the extension, they can enable it to receive tests.

How many testers are assigned to each test?

A minimum of 2 testers are assigned to each test per browser. If our algorithm determines that additional testers are required to reach a consensus, or if testers abandon a test in progress, we recruit more testers to deliver a reliable result.

How do testers handle pop-ups?

Often, customers build pop-up modals for their site, which appear in different places. In Rainforest, pop-ups are divided into three groups:

- If a pop-up indicates a bug, the tester fails the test.

- If a pop-up doesn’t indicate a bug, the tester continues testing.

- If it’s not apparent whether a pop-up indicates a bug, the tester continues testing.

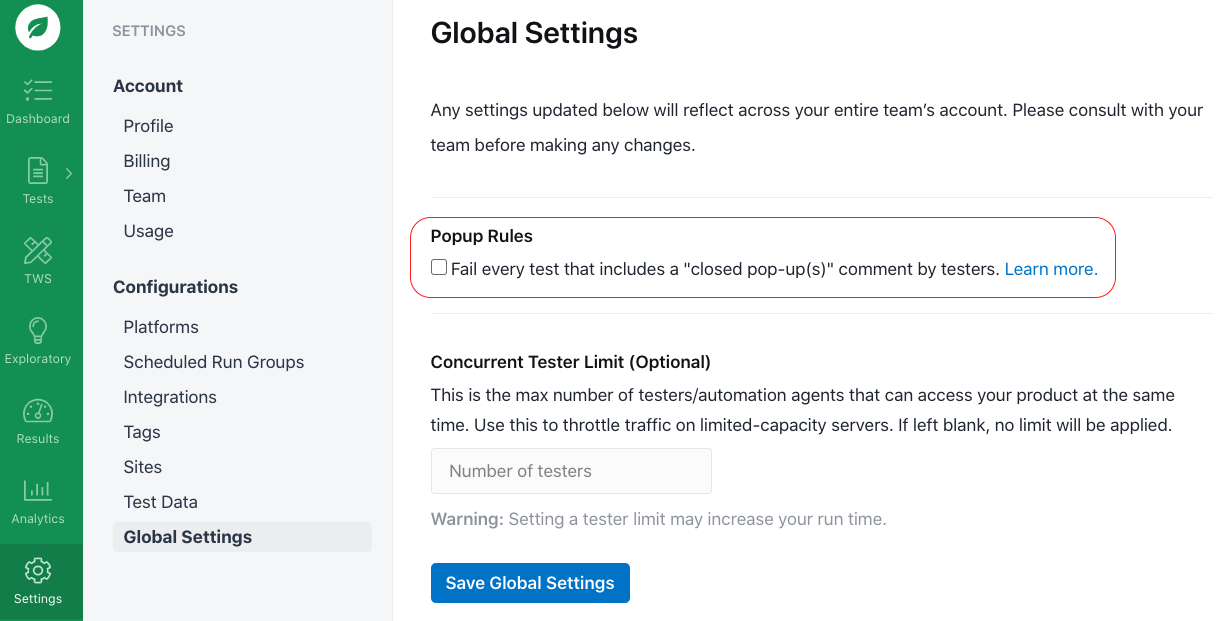

You can choose how to handle pop-ups in the third group. Set Rainforest to either fail or not fail the test when such a pop-up appears. The default is to not fail the step. To change this behavior, see the Global Settings page.

Toggling the pop-up rules.
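The three pop-up groups and the configurable setting amount to a small decision rule. The following sketch shows that rule under assumed names: `Popup` and the `fail_on_unclear` parameter are hypothetical and do not correspond to a real Rainforest API or setting name.

```python
from enum import Enum


class Popup(Enum):
    BUG = "indicates a bug"
    NOT_BUG = "does not indicate a bug"
    UNCLEAR = "not apparent whether it indicates a bug"


def should_fail(popup: Popup, fail_on_unclear: bool = False) -> bool:
    """Decide whether a pop-up causes a failure.

    fail_on_unclear stands in for the setting on the Global Settings page;
    it defaults to False, matching the documented default of not failing.
    """
    if popup is Popup.BUG:
        return True
    if popup is Popup.UNCLEAR:
        return fail_on_unclear
    return False
```

Only the third group is affected by the setting: `should_fail(Popup.UNCLEAR)` is `False` by default and becomes `True` with `fail_on_unclear=True`.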

What safeguards do you provide to regulate tester behavior?

Whenever you open up your unreleased software to the outside world, you introduce some risk. As with most things in Rainforest, it’s worth weighing your exposure against the effort required to mitigate that risk, such as performing the testing yourself.

Most of our testers make their living with Rainforest. We have thousands of workers who rely on us as a source of income. As a result, they do not treat this relationship lightly. They work diligently to perform their job well. We have a single crowd of testers to serve many users. Each tester sees multiple websites and apps during a testing session. It’s worth considering this when assessing the level of risk.

Most of our users test on a staging server minutes before the code is shipped to production and exposed to the public. If this is your use case, there’s not much a tester can do that a regular user cannot do once the code is live. That said, here are some things to keep in mind.

Data Sanitization

As the customer, it is your responsibility to ensure that all data provided to testers has been sanitized and all Personally Identifiable Information (PII) removed or obscured.

Effective staging and QA environments mirror production as closely as possible. If this sounds scary, our CTO’s blog post on Optimal Environment Setup is well worth a read.

Part of your preparation is ensuring you have test data that is similar to what’s in production. Credit card numbers, personal email addresses, phone numbers, and the like should be obscured. It goes without saying that you should not give testers access to shared logins for critical parts of your application.
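As a rough illustration of obscuring such values, the sketch below masks email addresses and card-like numbers in free text. It is a minimal example under stated assumptions, not a complete sanitization tool: the patterns are simplistic, phone numbers are not covered, and keeping the last four card digits is a choice made for this example only. The function name `sanitize` is hypothetical.

```python
import re

# Simplistic patterns for illustration; production data needs stricter rules.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
CARD = re.compile(r"\b(?:\d[ -]?){12,15}\d\b")  # 13 to 16 digits, optional separators


def mask_card(match: re.Match) -> str:
    """Replace a card-like number, keeping only its last four digits."""
    digits = re.sub(r"\D", "", match.group())
    return "**** **** **** " + digits[-4:]


def sanitize(text: str) -> str:
    """Obscure email addresses and card-like numbers in a string."""
    text = EMAIL.sub("user@example.com", text)
    return CARD.sub(mask_card, text)
```

Running a pass like this over seed data before loading it into a staging environment keeps the data shaped like production while removing the sensitive values.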

Custom NDAs

All Rainforest testers must sign a non-disclosure agreement (NDA) with Rainforest before they can test with us. As testers learn about customers’ products through testing, our NDA ensures the information is not shared.

In addition to the Rainforest NDA, you can require testers to sign your custom NDA before they’re allowed to access your app. In this case, all jobs for your account are performed by testers who have signed your NDA. To make a custom NDA request, reach out to your CSM.

Malware Scans

If a tester elects to receive jobs that require HIPAA compliance, they must provide malware scans from the systems they use to access our platform. They upload the scan results to their portal profile. Note that this is not a requirement for all testers—only those who want to receive HIPAA-compliant jobs.

Two-Factor Authentication

We provide the support needed for testers to secure their accounts using two-factor authentication (2FA), which you can require. This helps us ensure verified tester accounts remain secure.

How can I submit tester feedback?

The Rainforest Tester Rating System allows you to provide feedback on how a tester performed in any step of a completed test. You can give a thumbs-up or thumbs-down. Moreover, you can add feedback about your rating.

Rainforest uses your feedback to quickly identify issues that affect test quality. Upon reviewing the data collected, Rainforest proactively improves the quality of results by identifying areas for further training and instances of tester inconsistency.

Providing feedback on a test.

Thumbs-up is the best possible rating; it indicates outstanding performance. Usually, good ratings are the result of a tester providing helpful comments, possibly uncovering a previously unknown bug.

Conversely, a thumbs-down rating usually indicates that the tester didn’t provide any comments or performed poorly overall.

If you have any questions, reach out to us at [email protected].
